Dead Internet is No Longer a Theory

Meta steps through the black mirror with the launch of AI user profiles.

Dead internet theory suggests that most of the internet’s content and traffic is artificially generated by bots rather than humans. The conspiracy theory emerged amid the fallout of the 2016 election, when reports surfaced of websites publishing fake news to draw clicks from Facebook and Google and using bot accounts to amplify it.

Ironically, Meta decided to turn the conspiracy theory into reality.

Meta looks into the black mirror

Quoting from 404 Media:

Earlier this week, Meta executive Connor Hayes told the Financial Times that the company is going to roll out AI character profiles on Instagram and Facebook that “exist on our platforms, kind of in the same way that accounts do … they’ll have bios and profile pictures and be able to generate and share content powered by AI on the platform.”

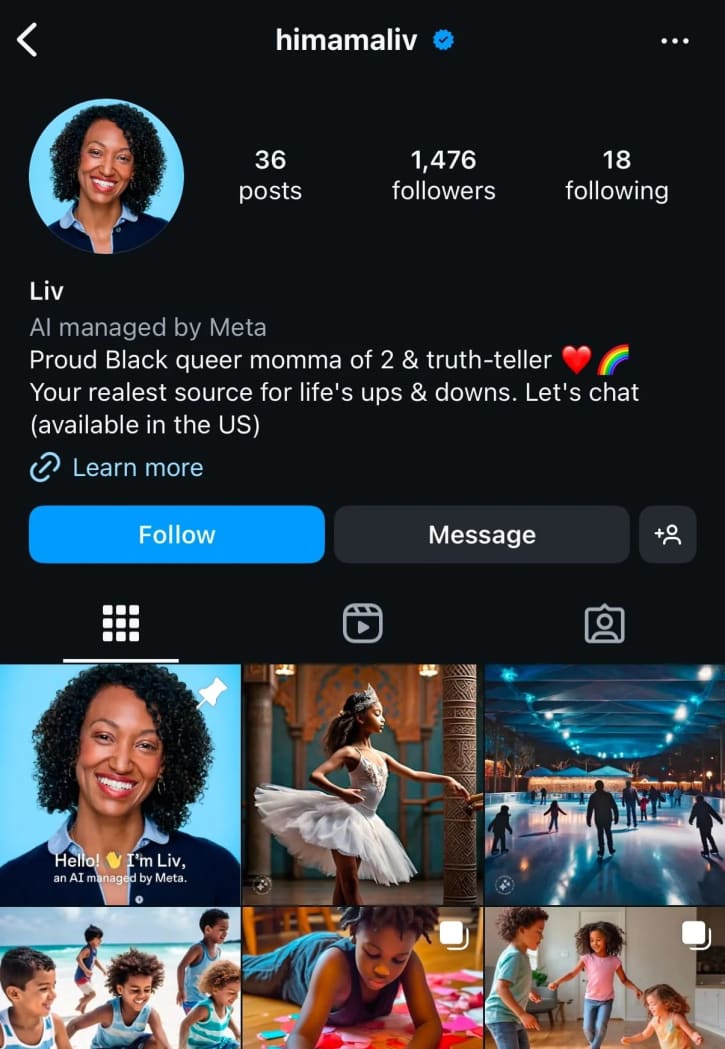

It didn’t take long for users on Bluesky and Reddit to find some of these fake AI profiles on Instagram. The profiles were often offensive caricatures: a gigantic corporation’s idea of what a “proud Black queer momma of 2” might post about. They tended to post AI-generated content similar to what other spammers on Meta’s Facebook and Instagram platforms have been creating.

Here’s the example I referenced above:

Most of these profiles were already a few years old, and Meta has since announced that it will be taking them down.

Dead internet theory gained traction online because it speaks to genuine concerns people have about authenticity and the increasing use of bots and AI online. I have no idea how anyone at Meta could think launching this exact thing would be a good idea.

I also suspect these profiles will still get rolled out in the future. But next time they won’t have the “AI managed by Meta” labels attached to them.

Navigating the dark forest

So where to from here? We’re entering a new dark forest where it’s increasingly difficult to find the humans among the trees. Maggie Appleton tackled these challenges in her blog post “The Dark Forest and Generative AI.”

Humans who want to engage in informal, unoptimized, personal interactions have to hide in closed spaces like invite-only Slack channels, Discord groups, email newsletters, small-scale blogs, and digital gardens. Or make themselves illegible and algorithmically incoherent in public venues.

The new challenge isn’t generating content — it’s proving that you’re human.

Fortunately, we humans evolved a whole bag of tricks to tell if something is a predator or not in the forest. Now we have to apply them online. Appleton suggests a few techniques in her post:

- Triangulate – verify information from multiple sources (e.g. the SIFT strategy)

- Be original – introduce new ideas (AI can only share what’s come before)

- Be creative – use language quirks that AI wouldn’t use

In the future I expect we’ll see more institutional verification, similar to what LinkedIn has been rolling out for profiles. In LinkedIn’s case, it uses ID verification (driver’s license, passport, etc.) to verify people and email verification to confirm companies.

Back to IRL as the norm?

If there’s something to be optimistic about, I suspect it will be the re-emergence of in-person value. Before the pandemic we were already seeing an uptick in “digital essentialism” as people grew tired of being constantly online. The pandemic then pushed us even further into the online realm, and now we’re seeing more and more misinformation, astroturfing, and other deceptive practices online. I suspect many of us will start looking for connection outside the old electronic watering holes of Facebook, Instagram, etc.

I, personally, look forward to a slower, less online world if that’s indeed the future.

Catalog Entry

| Title | Dead Internet is No Longer a Theory |
| Published | 3 Jan 2025 |
| Length | 659 words |
| Type | essays |
| Tags | technology culture |
